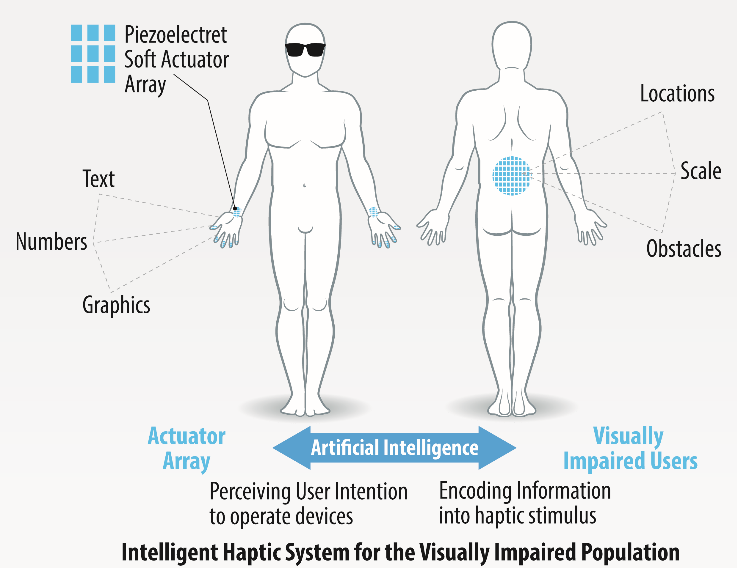

According to the WHO's 2019 World Report on Vision, at least 2.2 billion people worldwide have a vision impairment or blindness. Despite tremendous progress in medical science, rehabilitation technology, health promotion and policymaking over the past several decades, these efforts have struggled to keep pace with the growing number of people with visual impairment and blindness. Haptic devices have long been used to help visually impaired people live and work, but limited functionality and high cost have prevented their wide adoption.

There is an urgent need for intelligent, affordable haptic systems that let visually impaired people perceive their surroundings, obtain information and communicate with their electronic devices. A key challenge for such a system is that inferring a user's intention from body movement in real time demands substantial computing power. In this project, our lab will combine haptic actuators with AI to design intelligent, affordable haptic systems that can serve as vision substitutes for visually impaired people. Specifically, we will study how to efficiently train deep neural networks to perceive user intention from sensor-array input, and how to deploy the trained networks on electronic or optical chips for ultra-fast data processing.

> Yma@polyu.edu.hk

> Department of Mechanical Engineering

> Research Institute for Intelligent Wearable Systems (RI-IWEAR)

> FG 604, The Hong Kong Polytechnic University,

> Hung Hom, Kowloon, HK, P.R.C.

> (+852) 2766 7823